Through your computer, mobile phone, and other digital devices, you leave behind hundreds of digital traces (also called data traces) every day: bits of information about you that are created, stored, and collected.

When your digital traces are put together to create stories about you or profiles of you, these become your digital shadows. These can give others huge insight into your life, but they can also be totally wrong. Either way, once they're out there, they are almost impossible to control.

We leave hundreds of digital traces every day. What are they and how do we create them?

Once digital traces are created, they usually leave our immediate control and end up in the hands of others.

A closer look at the often-made claim that "effective anonymisation is possible".

"I've got nothing to hide"; "It's just the internet"; "But I'm just one in millions"... and four more.

We live in a world currently suffering from an extreme case of Obsessive Collection Disorder. What is actually going on? Where do we fit in? What does all of this mean for us? This section takes a more in-depth look at things.

Watch this space!

Resources on privacy from other excellent organisations and individuals:

Talk: The Hidden Battles to Collect Your Data and Control Your World

- NDC 2015

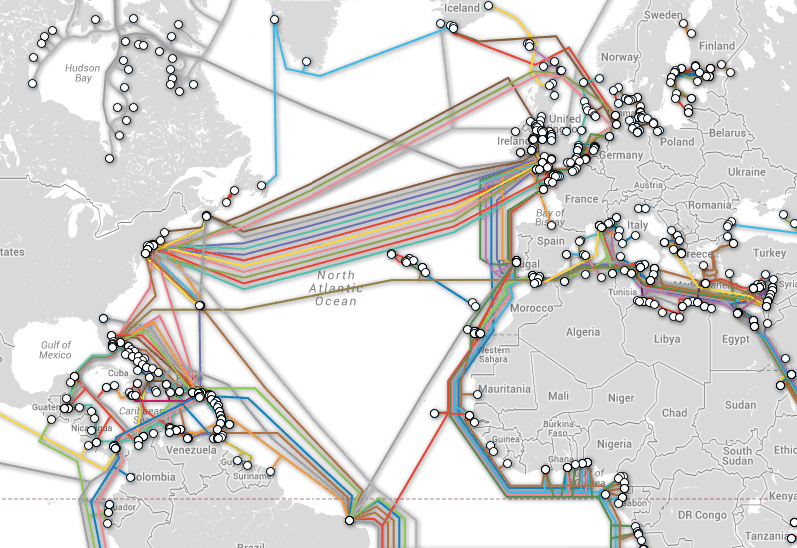

40 maps that will help you better understand where the internet came from, how it works, and how it's used by people around the world.

- Timothy B. Lee

Talk: Why you need to care about privacy, even if you have nothing to hide.

- TED

Gender, privacy and digital security manual.

- Tactical Tech

How to communicate online in a way that’s private, secret and anonymous.

- The Intercept